What is Sana Video?

Sana Video is a small diffusion model designed to generate videos at up to 720×1280 resolution and up to one minute in length. It produces high-resolution videos with strong text-video alignment at fast speeds, and it can be deployed on RTX 5090 GPUs. Together, these properties make video generation more accessible and cost-effective.

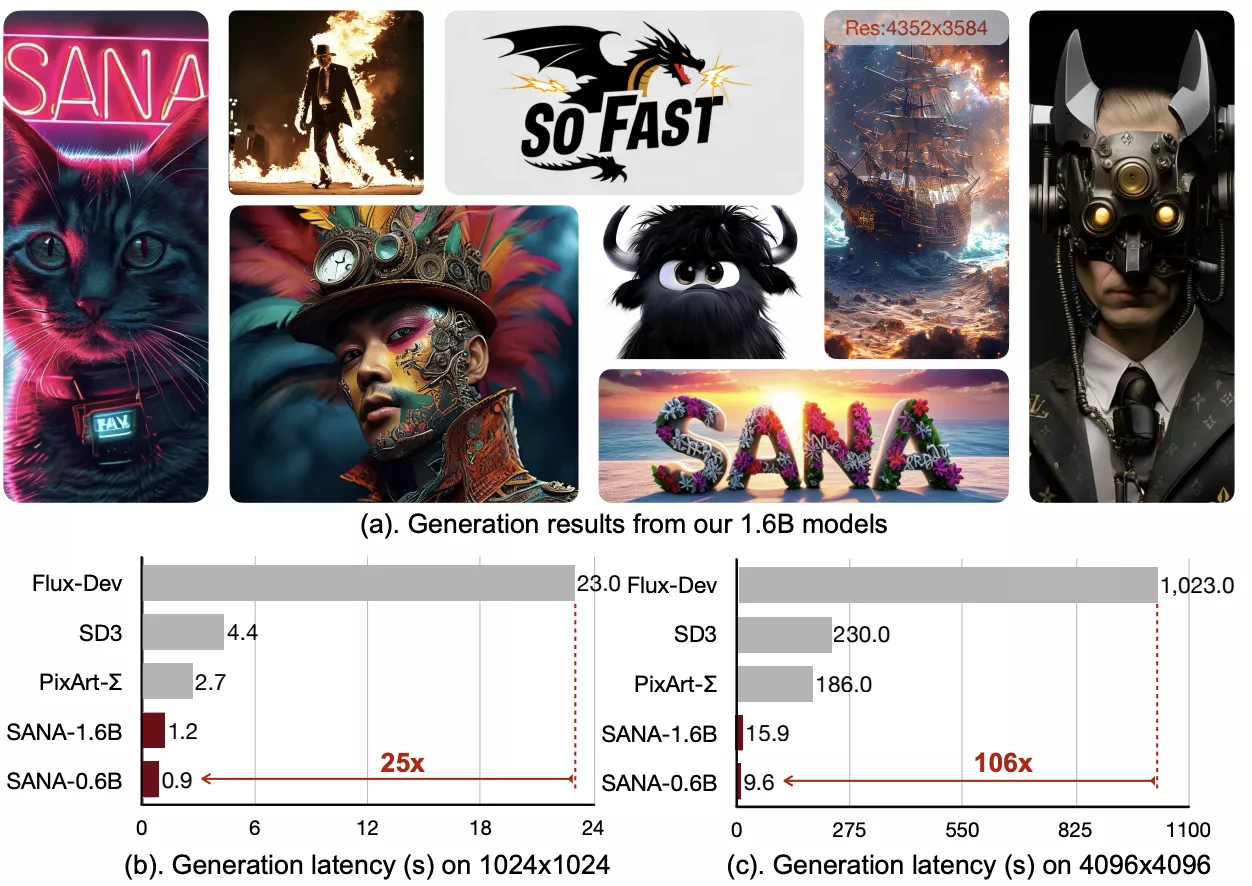

The model builds upon the foundation of Sana, a text-to-image framework that generates images up to 4096 × 4096 resolution. Sana Video extends these capabilities into the temporal dimension, allowing for the creation of dynamic video content from text descriptions or static images. The system synthesizes videos with strong alignment between text prompts and visual output, making it useful for content creators, researchers, and developers.

Two core designs enable efficient, effective, and long video generation. First, Linear DiT replaces vanilla attention with linear attention, which is more efficient when processing the large number of tokens required for video generation. Second, a constant-memory KV cache for Block Linear Attention enables minute-long video generation by employing a block-wise autoregressive approach with a fixed memory cost.

Overview of Sana Video

| Feature | Description |
|---|---|
| AI Tool | Sana Video |
| Category | Video Generation Framework |
| Function | Text-to-Video and Image-to-Video Generation |
| Maximum Resolution | 720×1280 pixels |
| Frame Rate | 16 frames per second |
| Maximum Duration | 1 minute |
| Generation Speed | 60 seconds latency for 5-second video |
| Hardware Requirements | RTX 5090 GPU (deployable) |

Technical Foundation

Sana Video builds on the technical innovations developed for Sana, the text-to-image framework. Understanding these foundations helps explain how Sana Video achieves its performance.

DC-AE Compression

Traditional autoencoders downsample images by a factor of 8 along each side, but Sana uses a deep compression autoencoder (DC-AE) that downsamples by a factor of 32. This stronger compression reduces the number of latent tokens that need to be processed, making the system more efficient. For video generation, the compression matters even more because a video contains many frames, each of which must be processed.
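To make the effect of the compression factor concrete, here is a minimal sketch that counts latent positions for one image. It assumes the factor applies to each spatial side and ignores any patching inside the transformer. The 1024×1024 input size and the function name `latent_positions` are illustrative choices, not values from Sana.

```python
def latent_positions(height: int, width: int, factor: int) -> int:
    """Spatial latent positions after downsampling each side by `factor`."""
    if height % factor or width % factor:
        raise ValueError("height and width must be divisible by factor")
    return (height // factor) * (width // factor)


if __name__ == "__main__":
    height, width = 1024, 1024  # illustrative image size (assumption)
    for factor in (8, 32):
        count = latent_positions(height, width, factor)
        print(f"{factor}x per side -> {count} latent positions")
```

Under these assumptions, moving from 8× to 32× per side cuts the number of positions by a factor of 16, because the saving applies to both height and width.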

Linear Diffusion Transformer

Sana Video replaces vanilla attention mechanisms with linear attention in its Diffusion Transformer architecture. Linear attention is more efficient at high resolutions without sacrificing quality. When generating videos, the number of tokens increases dramatically compared to images, making linear attention essential for maintaining reasonable processing times.
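The idea behind linear attention can be sketched in a few lines of NumPy. The example below assumes a ReLU feature map and a simple sum normalization; the article does not specify these details, so treat them as illustrative. It shows that reordering the matrix products avoids building the N×N score matrix while producing the same result.

```python
import numpy as np


def relu_linear_attention(q, k, v, eps=1e-6):
    """Linear-order form: summarize keys/values once, then apply queries."""
    q, k = np.maximum(q, 0.0), np.maximum(k, 0.0)
    kv = k.T @ v            # (d_k, d_v) summary, independent of token count
    k_sum = k.sum(axis=0)   # (d_k,) normalizer summary
    return (q @ kv) / (q @ k_sum + eps)[:, None]


def relu_quadratic_attention(q, k, v, eps=1e-6):
    """Reference form: same math, but materializes the (N, N) score matrix."""
    q, k = np.maximum(q, 0.0), np.maximum(k, 0.0)
    scores = q @ k.T        # (N, N)
    return (scores @ v) / (scores.sum(axis=1) + eps)[:, None]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_tokens, d_k, d_v = 256, 16, 16  # hypothetical sizes
    q = rng.normal(size=(n_tokens, d_k))
    k = rng.normal(size=(n_tokens, d_k))
    v = rng.normal(size=(n_tokens, d_v))
    same = np.allclose(relu_linear_attention(q, k, v),
                       relu_quadratic_attention(q, k, v))
    print("outputs match:", same)
```

The two functions differ only in the order of multiplication. Because no softmax sits between the query-key product and the values, the products can be regrouped, which is what removes the quadratic term.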

Block Linear Attention with Constant-Memory KV Cache

To generate long videos, Sana Video employs a block-wise autoregressive approach with a constant-memory state derived from the cumulative properties of linear attention. This constant-size state serves as the KV cache: it provides the Linear DiT with global context at a fixed memory cost, eliminating the need for a traditional KV cache that grows with video length. This design enables efficient minute-long video generation.
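The constant-memory property follows from the fact that linear attention only needs running sums over past keys and values. The sketch below illustrates that property under assumptions: a ReLU feature map, and a block that attends to itself plus all earlier blocks. The function name `blockwise_linear_attention` is hypothetical, and the actual Sana Video block design may differ.

```python
import numpy as np


def blockwise_linear_attention(q, k, v, block_size, eps=1e-6):
    """Process tokens block by block, carrying only a fixed-size state.

    Returns per-token outputs and the final (kv, k_sum) state.
    """
    n_tokens, d_k = k.shape
    d_v = v.shape[1]
    kv = np.zeros((d_k, d_v))  # running sum of phi(k)^T v
    k_sum = np.zeros(d_k)      # running sum of phi(k)
    outputs = []
    for start in range(0, n_tokens, block_size):
        end = start + block_size
        phi_k = np.maximum(k[start:end], 0.0)
        # Fold the current block into the state; the state shape never grows.
        kv += phi_k.T @ v[start:end]
        k_sum += phi_k.sum(axis=0)
        phi_q = np.maximum(q[start:end], 0.0)
        # Queries in this block see this block and every earlier block.
        outputs.append((phi_q @ kv) / (phi_q @ k_sum + eps)[:, None])
    return np.concatenate(outputs), (kv, k_sum)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    q = rng.normal(size=(40, 8))
    k = rng.normal(size=(40, 8))
    v = rng.normal(size=(40, 4))
    out, (kv, k_sum) = blockwise_linear_attention(q, k, v, block_size=10)
    print("output shape:", out.shape)
    print("state shapes:", kv.shape, k_sum.shape)
```

However many blocks are processed, the carried state stays at `d_k × d_v` plus `d_k` numbers. A conventional KV cache would instead store every past key and value.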

Key Features of Sana Video

High-Resolution Video Generation

Sana Video generates videos at 720×1280 resolution, providing clear and detailed output suitable for various applications. The model maintains quality across different resolutions while keeping processing times reasonable.

Long-Duration Content

The system can generate videos up to one minute in length, making it suitable for creating longer-form content. The constant-memory KV cache design ensures that memory usage remains manageable even for extended videos.

Text-to-Video Generation

Users can generate videos from natural language descriptions. The model maintains strong alignment between text prompts and visual output, ensuring that generated videos match the intended description.

Image-to-Video Generation

Sana Video can animate static images, bringing still frames to life. This capability allows users to create dynamic content from existing images, adding motion and temporal dynamics to static visuals.

Efficient Processing

The linear attention mechanism and efficient compression reduce computational requirements compared to traditional approaches. This efficiency makes video generation more accessible and cost-effective.

Fast Generation Speed

Sana Video generates content at speeds that are competitive with or faster than other models of similar size. The system can produce a 5-second 720p video with 60 seconds of latency; on RTX 5090 GPUs with NVFP4 precision, the reported latency is 29 seconds.

Cost-Effective Training

The training process requires 12 days on 64 H100 GPUs, which represents only 1% of the cost of training larger models like MovieGen. This lower training cost makes the technology more accessible to researchers and organizations.
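The training budget is easier to compare when expressed in GPU-days or GPU-hours. The short calculation below uses only the two figures stated above and assumes all 64 GPUs run for the full 12 days.

```python
TRAINING_DAYS = 12  # from the article
NUM_GPUS = 64       # from the article (H100)

gpu_days = TRAINING_DAYS * NUM_GPUS
gpu_hours = gpu_days * 24

if __name__ == "__main__":
    print(f"{gpu_days} GPU-days, {gpu_hours} GPU-hours")
```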

Competitive Performance

Sana Video achieves performance comparable to modern state-of-the-art small diffusion models such as Wan 2.1-1.3B and SkyReel-V2-1.3B, while being 16 times faster in measured latency. This combination of quality and speed makes it practical for real-world applications.

Applications and Use Cases

Content Creation

Content creators can use Sana Video to generate video content from text descriptions or animate static images. This capability supports various creative projects, from social media content to longer-form video productions.

Prototyping and Previsualization

Filmmakers and video producers can use Sana Video to quickly prototype ideas and create previsualization content. The fast generation speed allows for rapid iteration during the creative process.

Educational Content

Educators can generate video content to illustrate concepts, create animated explanations, or produce visual aids for teaching materials. The text-to-video capability makes it easy to create content from written descriptions.

Research and Development

Researchers working on video generation, computer vision, or related fields can use Sana Video as a tool for experimentation. The model's efficiency and performance make it suitable for research applications.

World Simulation and Physical Intelligence

Sana Video demonstrates capabilities in world simulation and physical intelligence, showing how generated videos can represent realistic physical interactions and environmental dynamics. This makes it useful for applications requiring realistic video content.

Performance and Efficiency

Sana Video achieves its performance through several design choices that balance quality, speed, and resource requirements. The model's efficiency comes from its linear attention mechanism, which reduces computational complexity compared to standard attention methods.

The training process benefits from effective data filtering and training strategies that reduce the overall cost. At 12 days on 64 H100 GPUs, Sana Video is more accessible than models that require significantly more computational resources.

In terms of inference speed, Sana Video generates content faster than many comparable models. Its measured latency is 16 times lower than that of competing small models such as Wan 2.1-1.3B and SkyReel-V2-1.3B, which makes it more practical for workflows that depend on quick iteration.

The deployment on RTX 5090 GPUs with NVFP4 precision further improves inference speed. This quantization approach reduces the latency for generating a 5-second 720p video from 71 seconds to 29 seconds, representing a 2.4 times speedup. This makes the technology more accessible to users with consumer-grade hardware.
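The speedup figure can be checked directly from the two latencies. The sketch below also derives a nominal per-frame cost, assuming a 5-second clip at 16 frames per second contains 80 frames; the exact frame count used by the model is not stated here.

```python
BASELINE_LATENCY_S = 71.0  # RTX 5090 latency before NVFP4, from the article
NVFP4_LATENCY_S = 29.0     # RTX 5090 latency with NVFP4, from the article
CLIP_SECONDS = 5.0
FPS = 16

speedup = BASELINE_LATENCY_S / NVFP4_LATENCY_S
nominal_frames = int(CLIP_SECONDS * FPS)            # assumption: 5 s * 16 fps
seconds_per_frame = NVFP4_LATENCY_S / nominal_frames
compute_per_video_second = NVFP4_LATENCY_S / CLIP_SECONDS

if __name__ == "__main__":
    print(f"speedup: {speedup:.2f}x")
    print(f"nominal frames: {nominal_frames}")
    print(f"seconds per frame: {seconds_per_frame:.4f}")
    print(f"compute seconds per video second: {compute_per_video_second:.1f}")
```

The ratio rounds to the 2.4 times speedup quoted above. The last figure shows that generation still takes several seconds of compute per second of video on this hardware.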

Comparison with Other Models

Sana Video compares favorably with other small diffusion models in the video generation space. When compared to models like Wan 2.1-1.3B and SkyReel-V2-1.3B, Sana Video achieves competitive performance while offering significantly faster generation speeds.

The model's efficiency comes from its linear attention design, which scales better to high resolutions and long sequences than traditional attention mechanisms. This design choice allows Sana Video to maintain quality while reducing computational requirements.

In terms of training cost, Sana Video requires only 1% of the computational resources needed for training larger models like MovieGen. This lower barrier to entry makes the technology more accessible to researchers and organizations with limited computational budgets.

Technical Details

Sana Video uses a block linear diffusion transformer architecture that processes video frames efficiently. The model employs linear attention throughout, which reduces the computational complexity from quadratic to linear in the number of tokens.
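A rough operation count shows why the change from quadratic to linear matters as token counts grow. The estimate below counts only the two large matrix products per attention head and ignores softmax, normalization, and projections. The token counts and the head dimension of 32 are hypothetical values chosen for illustration.

```python
def quadratic_attention_macs(n_tokens: int, head_dim: int) -> int:
    """Multiply-adds for Q K^T plus scores @ V, per head."""
    return 2 * n_tokens * n_tokens * head_dim


def linear_attention_macs(n_tokens: int, head_dim: int) -> int:
    """Multiply-adds for K^T V plus Q @ (K^T V), per head."""
    return 2 * n_tokens * head_dim * head_dim


if __name__ == "__main__":
    head_dim = 32  # hypothetical
    for n_tokens in (1_000, 10_000, 100_000):  # hypothetical sequence lengths
        quad = quadratic_attention_macs(n_tokens, head_dim)
        lin = linear_attention_macs(n_tokens, head_dim)
        print(f"N={n_tokens:>7}: quadratic/linear = {quad / lin:.1f}")
```

In this simplified model the ratio is `n_tokens / head_dim`, so the advantage of linear attention grows in proportion to sequence length.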

The constant-memory KV cache design enables long video generation by maintaining a fixed memory footprint regardless of video length. This is achieved through the cumulative properties of linear attention, which allow the model to compress historical information into a constant-size state.

The training process incorporates effective data filtering and selection strategies that improve convergence and reduce training time. These strategies help the model learn from high-quality examples while avoiding overfitting to noisy or low-quality data.

For inference, the model supports both text-to-video and image-to-video generation modes. The text-to-video mode uses a decoder-only text encoder that processes natural language descriptions, while the image-to-video mode takes static images as input and generates temporal dynamics.

Pros and Cons

Pros

- High-resolution video generation up to 720×1280

- Long-duration content generation up to one minute

- Fast generation speed compared to similar models

- Cost-effective training process

- Strong text-video alignment

- Support for both text-to-video and image-to-video modes

- Efficient memory usage with constant-memory KV cache

- Deployable on consumer-grade GPUs with quantization

Cons

- Maximum resolution limited to 720×1280

- Frame rate fixed at 16 frames per second

- Requires GPU hardware for optimal performance

- Generation time increases with video length

- Quality may vary depending on input complexity

How to Use Sana Video?

Step 1: Prepare Your Input

For text-to-video generation, prepare a clear text description of the video you want to create. For image-to-video generation, have a static image ready that you want to animate.

Step 2: Set Generation Parameters

Configure the resolution, duration, and other parameters for your video. Sana Video supports resolutions up to 720×1280 and durations up to one minute.
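The limits in this step can be captured in a small validation helper. The sketch below is hypothetical: `GenerationRequest` and its fields are not part of any Sana Video API. It also assumes that 720×1280 may be used in either orientation and that the frame count is simply duration times 16 frames per second.

```python
from dataclasses import dataclass
from typing import Optional

MAX_SHORT_SIDE = 720    # from the stated 720x1280 limit
MAX_LONG_SIDE = 1280
MAX_DURATION_S = 60.0   # one minute
FPS = 16


@dataclass(frozen=True)
class GenerationRequest:
    """Hypothetical request object used only to illustrate the limits."""
    prompt: str
    height: int = 720
    width: int = 1280
    duration_s: float = 5.0
    image_path: Optional[str] = None  # set this for image-to-video

    def __post_init__(self):
        if not self.prompt.strip() and self.image_path is None:
            raise ValueError("provide a text prompt or an input image")
        short_side, long_side = sorted((self.height, self.width))
        if short_side <= 0:
            raise ValueError("height and width must be positive")
        if short_side > MAX_SHORT_SIDE or long_side > MAX_LONG_SIDE:
            raise ValueError("resolution exceeds 720x1280")
        if not 0 < self.duration_s <= MAX_DURATION_S:
            raise ValueError("duration must be in (0, 60] seconds")

    @property
    def mode(self) -> str:
        return "image-to-video" if self.image_path else "text-to-video"

    @property
    def num_frames(self) -> int:
        return round(self.duration_s * FPS)


if __name__ == "__main__":
    request = GenerationRequest(prompt="a sailboat at sunset", duration_s=5.0)
    print(request.mode, request.num_frames)
```

Validating parameters before submission catches out-of-range settings early, before any GPU time is spent.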

Step 3: Generate the Video

Submit your input to the Sana Video model. The system will process your request and generate the video content based on your specifications.

Step 4: Review and Refine

Review the generated video and make adjustments to your input if needed. You can refine your text prompt or try different images to achieve the desired result.

Step 5: Export Your Video

Once satisfied with the output, export your video in the desired format. The generated content can be used in your projects or further edited with video editing software.